As AI becomes a third hand for workers and consumers alike, it is tempting to push it to the limits of everything it has learned from. But AI is fallible. To understand this, let’s look back at the last time “AI is taking over” was all the rage, and how it ended up tripping over itself.

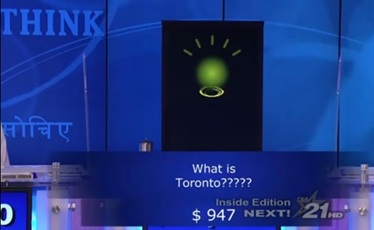

In January 2011, IBM’s Watson was competing against the best human players Jeopardy! had seen, including now co-host Ken Jennings. At the end of the episode, the Final Jeopardy category was U.S. Cities and the clue was “Its largest airport is named for a World War II hero; its second largest, for a World War II battle”. Watson answered Toronto, presumably meaning Toronto, Ontario, Canada, which is certainly not a U.S. city. The correct response was Chicago, home to O’Hare and Midway airports. Luckily for Watson, it had little confidence in its answer and wagered a mere $947.

With improvements in software and hardware, such a mistake seems unlikely today. But today’s GPT systems can still go down a path of their own, and they are still capable of mistakes. Sometimes the mistake comes from treating a nonfactual source as truth. Sometimes conflicting pieces of information, which would leave a person in doubt, are resolved by the AI into a best-effort truth. Deliberately misleading sources, or a self-selected set of sources, can produce a view that is one-sided and does not represent the truth.

As AI becomes a must-have in day-to-day operations, users must remember that, however tempting it is to take its results as fact, they also need to consider whether the answer is simply wrong.